
September 24, 2024 | Volume 20 Issue 36

Designfax weekly eMagazine


Enhancing industrial automation with edge AI and sensor technology -- a primer

By Avnet's Engineering Team

Artificial intelligence (AI) will play an important role in the future of industrial automation. The key to success will lie in sensor data fusion, which provides the raw information AI models need to infer actions.

As the technology progresses, more sensors will embed AI to pre-process data at the very edge. This will blur the line between edge AI running on dedicated processors and sensors that are inherently "intelligent." In an AI-driven, autonomous world, that built-in intelligence is what sensor fusion comes to mean, and the state-of-the-art sensors used in industrial automation will set the industry's direction of travel.

What artificial intelligence (AI) and machine learning (ML) mean

The term "artificial intelligence" was coined in 1955 by John McCarthy, later a Stanford professor emeritus, who defined it as "the science and engineering of making intelligent machines."

Nearly 70 years later, AI is commonly summarized as the simulation of human intelligence in machines. But what constitutes "intelligent"? Software engineers write code to perform specific functions. In industrial applications, this might mean responding to input from a sensor by triggering an actuator, such as a motor or a valve. The machine's behavior is consistent, but it is not intelligent: it does not exhibit the human cognitive traits of learning from experience, reasoning, or adapting its responses to change. That's where AI comes in.
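The difference between fixed logic and adaptive behavior can be sketched in a few lines. This is a minimal illustration, not an industrial controller: the pressure limit, the smoothing factor `alpha`, and the `tolerance` are hypothetical values, and the adaptive rule (an exponentially weighted moving average baseline) is only a very simple form of learning from experience, far short of a full ML model.

```python
# Hypothetical limit for illustration only; not taken from any real system.
PRESSURE_LIMIT_BAR = 6.0


def fixed_rule(pressure_bar: float) -> bool:
    """Conventional logic: open the relief valve above a hard-coded limit."""
    return pressure_bar > PRESSURE_LIMIT_BAR


class AdaptiveRule:
    """Flag readings that stray from a baseline learned from past readings.

    The baseline is an exponentially weighted moving average (EWMA), so the
    rule's idea of "normal" shifts as operating conditions change. This is
    simple adaptation, not a full machine learning model.
    """

    def __init__(self, alpha: float = 0.1, tolerance: float = 1.0):
        self.alpha = alpha          # how quickly the baseline adapts (0..1)
        self.tolerance = tolerance  # allowed deviation from the baseline
        self.baseline = None        # no experience yet

    def update(self, reading: float) -> bool:
        """Return True if the reading is anomalous, then learn from it."""
        if self.baseline is None:
            self.baseline = reading  # the first reading seeds the baseline
            return False
        anomalous = abs(reading - self.baseline) > self.tolerance
        # Move the baseline toward the new reading (learning from experience).
        self.baseline += self.alpha * (reading - self.baseline)
        return anomalous


rule = AdaptiveRule(alpha=0.5, tolerance=1.0)
flags = [rule.update(p) for p in [5.0, 5.2, 7.0]]
print(fixed_rule(5.0), flags)
```

The fixed rule gives the same answer for the same input forever; the adaptive rule judges each reading against what it has seen so far.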

Machine learning, a branch of AI, uses algorithms (defined sequences of steps that turn an input into an output) that allow computers to learn from data and make predictions based on it. The main components of an ML-enabled machine are data sources; a learning model that has been trained and validated on data; an inference engine (processor), where the machine analyzes new data in the context of its model to make predictions and decisions; and a platform that enables AI applications to be deployed in real-world settings. It is through ML technologies that machines exhibit human-like learning and adaptive behaviors.
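The data-source, train, validate, and infer sequence can be illustrated with the simplest possible model, a straight-line fit. This is a hedged sketch: the motor-current and temperature pairs are synthetic, the validation tolerance is an assumption, and real industrial models are far larger than a single slope and intercept.

```python
from statistics import mean


def train_linear(xs, ys):
    """Training: fit y = slope * x + intercept by ordinary least squares."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def predict(model, x):
    """Inference: apply the trained model to a new reading."""
    slope, intercept = model
    return slope * x + intercept


def mean_abs_error(model, xs, ys):
    return mean(abs(predict(model, x) - y) for x, y in zip(xs, ys))


# Synthetic data source: motor current (A) vs. winding temperature (degC).
train_x, train_y = [1.0, 2.0, 3.0, 4.0], [30.0, 35.0, 40.0, 45.0]
val_x, val_y = [1.5, 3.5], [32.5, 42.5]  # held-out validation readings

model = train_linear(train_x, train_y)
# Validation: only deploy a model whose error on unseen data is acceptable.
if mean_abs_error(model, val_x, val_y) > 1.0:  # hypothetical tolerance
    raise RuntimeError("model failed validation")
print(predict(model, 5.0))  # prints 50.0
```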

Much of modern machine learning is done through deep learning, which uses artificial neural networks loosely modeled on the neurons of the human brain. The artificial neurons, or nodes, are arranged in layers and connected to form a network architecture. These networks run on general-purpose processors or on dedicated AI accelerator chips, and, broadly, more nodes and layers give a network greater capacity to learn complex patterns, at the cost of more computation and memory.
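A forward pass through a tiny two-layer network shows what "nodes arranged in layers" means in practice: each node computes a weighted sum of its inputs plus a bias, then applies an activation function. The weights below are hand-picked and hypothetical; in a real network they are learned during training.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each node sums weighted inputs plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical weights: 2 inputs -> 3 hidden nodes -> 1 output node.
HIDDEN_W = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
HIDDEN_B = [0.0, 0.1, 0.2]
OUTPUT_W = [[1.0, -1.0, 0.5]]
OUTPUT_B = [0.0]


def forward(features):
    """Run the input through both layers; output is a score between 0 and 1."""
    hidden = dense(features, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)[0]


print(forward([1.0, 1.0]))
```

Adding nodes means adding rows to the weight lists; adding layers means chaining more `dense` calls. Both increase the arithmetic per inference.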

----------------------------------------

SIDEBAR

According to Harvard Medical School, the human brain has 86 billion neurons with 100 trillion connections between them. In April 2024, Intel announced Hala Point, "the industry's first 1.15-billion-neuron neuromorphic system." Hala Point consumes a maximum of 2,600 watts. The human brain consumes about 20 watts.

----------------------------------------
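The sidebar figures invite a back-of-the-envelope comparison of power per neuron. Treat it as rough: an artificial neuron is not equivalent to a biological one, and Hala Point's figure is its maximum draw rather than a typical load.

```python
# Figures from the sidebar; Hala Point's wattage is its stated maximum.
BRAIN_NEURONS = 86e9
BRAIN_WATTS = 20.0
HALA_POINT_NEURONS = 1.15e9
HALA_POINT_MAX_WATTS = 2_600.0


def watts_per_neuron(watts, neurons):
    return watts / neurons


brain = watts_per_neuron(BRAIN_WATTS, BRAIN_NEURONS)
hala = watts_per_neuron(HALA_POINT_MAX_WATTS, HALA_POINT_NEURONS)
print(f"Brain:      {brain:.2e} W per neuron")
print(f"Hala Point: {hala:.2e} W per neuron")
print(f"Ratio:      about {hala / brain:,.0f}x")
```

On these crude terms the brain comes out close to 10,000 times more power-efficient per neuron.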

Cloud or edge?

Large industrial plants generate vast amounts of data, sometimes measured in terabytes per day. Machine logs and event data, production metrics, time-series data from hosts of sensors, maintenance records, and supply chain transactions all contribute. In some instances, sensors produce many data points per second, and manufacturing lines may generate thousands of readings every minute.
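A quick estimate shows how sensor counts and sample rates reach terabytes per day. The plant below is hypothetical (1,000 vibration channels sampled at 10 kHz with 4-byte samples), and the estimate ignores timestamps, metadata, and compression.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_bytes(num_sensors: int, samples_per_second: int, bytes_per_sample: int) -> int:
    """Raw data volume per day, ignoring timestamps, metadata, and compression."""
    return num_sensors * samples_per_second * bytes_per_sample * SECONDS_PER_DAY


# Hypothetical plant: 1,000 vibration channels at 10 kHz, 4 bytes per sample.
volume = daily_bytes(num_sensors=1_000, samples_per_second=10_000, bytes_per_sample=4)
print(f"{volume / 1e12:.2f} TB per day")
```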

Sensor types include those that measure distance, speed, torque, force, motion, levels, magnetic fields, gases, humidity, sound, and light (both visible and infrared). Cameras build images and video from arrays of individual sensing elements (pixels).

Although sensor elements are analog components and detect analog phenomena, such as temperature, their measurements are usually converted to digital data close to the source, often after some analog signal conditioning, for onward processing and transmission.
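Converting a digitized reading back into a physical quantity is simple arithmetic. This sketch assumes a hypothetical 12-bit ADC with a 3.3 V reference and a hypothetical linear temperature sensor that outputs 0.5 V at 0 °C and rises 10 mV per °C; real parts specify their own resolution, reference, offset, and slope.

```python
def adc_to_volts(count: int, bits: int = 12, vref: float = 3.3) -> float:
    """Convert a raw ADC count to volts, taking one LSB as vref / 2**bits."""
    if not 0 <= count < 2 ** bits:
        raise ValueError("count is out of range for this ADC resolution")
    return count * vref / 2 ** bits


def volts_to_celsius(volts: float, offset_v: float = 0.5, volts_per_degree: float = 0.01) -> float:
    """Hypothetical linear sensor: offset_v at 0 degC, rising volts_per_degree per degC."""
    return (volts - offset_v) / volts_per_degree


raw_counts = [930, 931, 929]  # example readings from a 12-bit ADC
temps_c = [volts_to_celsius(adc_to_volts(c)) for c in raw_counts]
print([round(t, 2) for t in temps_c])
```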

Integrating AI with Internet of Things (IoT) sensor technology improves data analysis and decision-making, enabling smarter industrial systems. However, AI/ML can be compute-intensive, so the question arises of where the processing is best performed.

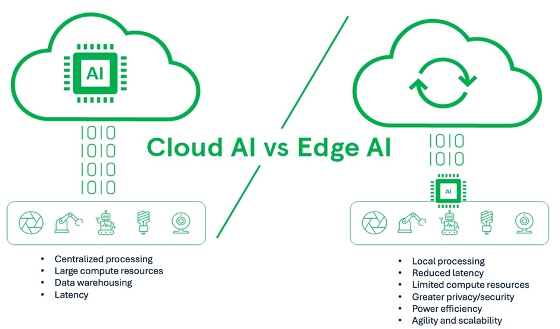

IoT edge computing means processing data at or near its source, rather than in centralized cloud computing resources or data centers. Edge computing can mean anything from processing data locally on a computer at the end of a manufacturing line to processing it in a network hub or router. However, the ultimate edge is the sensor itself, within the device or module.

Edge computing brings several benefits over a centralized model. The most notable is reduced latency, the delay between a system's input and its response. Minimizing latency is desirable for both efficiency and safety in industrial systems, many of which must operate in real time: they must respond within short, guaranteed deadlines, predictably and consistently over time.
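Because real time means guaranteed deadlines, it is judged on the worst case, not the average. In this sketch the response times and the 500 µs deadline are hypothetical; jitter is taken here as the spread between the fastest and slowest responses.

```python
from statistics import mean


def check_real_time(latencies_us, deadline_us):
    """Real time is judged on the worst case, not the average.

    Returns (meets_deadline, worst_case_us, jitter_us), where jitter is the
    spread between the fastest and slowest observed responses.
    """
    worst = max(latencies_us)
    jitter = worst - min(latencies_us)
    return worst <= deadline_us, worst, jitter


# Hypothetical response times in microseconds, checked against a 500 us deadline.
samples = [120, 135, 128, 610, 125]
ok, worst, jitter = check_real_time(samples, deadline_us=500)
print(f"average {mean(samples):.1f} us, worst {worst} us, jitter {jitter} us, ok={ok}")
```

The average looks comfortable, yet one late response means the system is not real time.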

Edge computing can enable response times measured in microseconds, whereas centralized processing typically responds within anywhere from a few tens to hundreds of milliseconds. Low latency is crucial for applications that require immediate responses, such as machinery control and safety monitoring, where even slight delays can lead to inefficiencies or hazards. Quality assurance, where low latency allows defects to be detected and corrected immediately, and predictive maintenance also benefit.
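One way to make these latency figures tangible is to ask how far a part travels before the system can react. The conveyor speed of 2 m/s and the three latencies (one edge, two centralized, spanning the ranges above) are illustrative assumptions.

```python
def travel_during_latency_mm(speed_m_per_s: float, latency_s: float) -> float:
    """Distance a part moves on a conveyor before the controller can react."""
    return speed_m_per_s * latency_s * 1000.0


CONVEYOR_SPEED = 2.0  # m/s, hypothetical
for label, latency in [("edge, 50 µs", 50e-6), ("cloud, 20 ms", 20e-3), ("cloud, 200 ms", 200e-3)]:
    print(f"{label:>14}: {travel_during_latency_mm(CONVEYOR_SPEED, latency):8.2f} mm")
```

At the edge the part moves a fraction of a millimeter; with centralized processing it can move tens or hundreds of millimeters, possibly past the point where a defect could be rejected.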

Further significant benefits of edge computing include the removal of a centralized single point of failure, which minimizes disruption from network outages; lower bandwidth requirements and data-transmission costs; greater agility in scaling IoT deployments to meet changing demands; and improved security, since less raw data has to leave the plant.
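The bandwidth saving comes largely from sending results instead of raw samples. This sketch assumes a hypothetical edge node that reduces each window of readings to a four-field summary; the field choice and byte sizes are assumptions, and a real design might still forward raw data around detected anomalies.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WindowSummary:
    """What an edge node might send upstream instead of every raw sample."""
    minimum: float
    maximum: float
    average: float
    count: int


def summarize(samples):
    return WindowSummary(min(samples), max(samples), mean(samples), len(samples))


def reduction_factor(samples_per_window, bytes_per_sample=4, summary_bytes=16):
    """Ratio of raw bytes to summary bytes (summary: four 4-byte fields)."""
    return (samples_per_window * bytes_per_sample) / summary_bytes


summary = summarize([0.98, 1.02, 1.00, 1.01])
print(summary, reduction_factor(1_000))
```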

[Credit: Image courtesy of Avnet]

A key challenge of performing AI/ML at the edge is the limited resources of sensors and the microcontrollers connected to them, notably processing power, memory capacity, and energy budget.
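Memory is often the first constraint to check. This hedged sketch compares a model's weight storage with a device's memory, reserving an assumed 50% for firmware, buffers, and working data. The 100,000-parameter model and 256 KiB device are hypothetical; storing weights as 8-bit integers rather than 32-bit floats is one common way to shrink a model, at some cost in precision.

```python
def model_bytes(num_parameters: int, bytes_per_parameter: int) -> int:
    """Memory needed just to store a model's weights."""
    return num_parameters * bytes_per_parameter


def fits_on_device(num_parameters, bytes_per_parameter, device_bytes, reserve_fraction=0.5):
    """Check the weights fit in memory, reserving a share for firmware and buffers."""
    budget = int(device_bytes * (1 - reserve_fraction))
    return model_bytes(num_parameters, bytes_per_parameter) <= budget


# Hypothetical: a 100,000-parameter model on a device with 256 KiB of memory.
DEVICE_BYTES = 256 * 1024
print(fits_on_device(100_000, 4, DEVICE_BYTES))  # 32-bit float weights
print(fits_on_device(100_000, 1, DEVICE_BYTES))  # 8-bit integer weights
```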

In practice, hybrid approaches that combine edge computing with the powerful processing resources of data centers often deliver the best cost-performance tradeoff. This can involve training in the cloud and deploying at the edge, or training at the edge and then centralizing learning in the cloud. The options widen further when engineers take advantage of two prominent sensor trends.
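The article does not name a specific technique for training at the edge and centralizing learning in the cloud. One well-known option is federated averaging: each edge device trains on its own data and sends only model parameters, which the cloud combines as an average weighted by each device's sample count. This is a minimal sketch of that aggregation step alone, with hypothetical parameter values.

```python
def federated_average(client_updates):
    """Weighted average of model parameters trained on separate edge devices.

    client_updates: list of (parameters, num_samples) pairs, where parameters
    is a list of floats of the same length for every device.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    length = len(client_updates[0][0])
    averaged = [0.0] * length
    for params, n in client_updates:
        if len(params) != length:
            raise ValueError("all devices must share the same model shape")
        for i, p in enumerate(params):
            averaged[i] += p * n / total  # devices with more data weigh more
    return averaged


# Two hypothetical edge devices; only parameters leave the site, not raw data.
global_params = federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)])
print(global_params)  # [2.5, 3.5]
```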

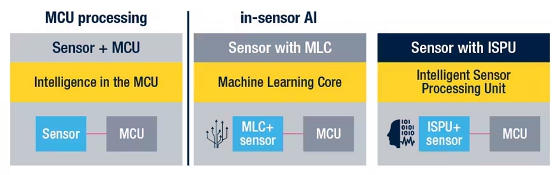

Sensor trends: Functional integration and fusion

Functional integration and sensor fusion are two prominent trends. Functional integration means adding signal conditioning and processing capabilities to a sensor within a single package. In these applications, processor architectures may be optimized for running AI algorithms, and TinyML models (machine learning models small enough to run on microcontrollers) may be used to perform AI inference close to the sensor node.
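A common first step in processing close to the sensor is reducing a window of raw samples to a handful of features that a small model can use. The feature set below (mean, variance, RMS, peak-to-peak) is an illustrative assumption, not a list taken from any particular product.

```python
import math


def window_features(samples):
    """Compact features from one window of raw accelerometer samples (in g)."""
    n = len(samples)
    avg = sum(samples) / n
    variance = sum((s - avg) ** 2 for s in samples) / n
    rms = math.sqrt(sum(s * s for s in samples) / n)
    return {
        "mean": avg,
        "variance": variance,
        "rms": rms,
        "peak_to_peak": max(samples) - min(samples),
    }


print(window_features([1.0, -1.0, 1.0, -1.0]))
```

Four numbers replace the whole window, which is what makes inference feasible on a constrained processor.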

Sensor fusion goes a step further, integrating multiple sensors on a single chip, again complete with signal conditioning and processing.
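A classic example of fusing two sensors is combining an accelerometer and a gyroscope to estimate tilt, using a complementary filter. The sketch assumes motion in a single plane, with the gyroscope axis perpendicular to it; the blend weight `alpha` and the sample interval are illustrative choices. It shows the general technique, not any vendor's on-chip algorithm.

```python
import math


def accel_tilt_deg(ax: float, az: float) -> float:
    """Tilt in one plane from gravity components; noisy, but does not drift."""
    return math.degrees(math.atan2(ax, az))


def complementary_filter(angle_deg, gyro_rate_dps, ax, az, dt_s, alpha=0.98):
    """Fuse gyroscope and accelerometer readings into one tilt estimate.

    The integrated gyro rate is smooth but drifts over time; the accelerometer
    angle is noisy but anchored to gravity. Blending them with weight alpha
    keeps the strengths of each.
    """
    gyro_estimate = angle_deg + gyro_rate_dps * dt_s
    return alpha * gyro_estimate + (1 - alpha) * accel_tilt_deg(ax, az)


# Device held still at a 30-degree tilt; the estimate starts at 0 and converges.
tilt = math.radians(30.0)
angle = 0.0
for _ in range(500):
    angle = complementary_filter(angle, gyro_rate_dps=0.0,
                                 ax=math.sin(tilt), az=math.cos(tilt), dt_s=0.01)
print(round(angle, 2))
```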

Some sensor technologies make this approach technically or economically unviable, but sensor fusion is particularly well suited to micro-electromechanical systems (MEMS) devices. These microsensors and actuators are made using modified semiconductor manufacturing processes and can integrate processing on the same device. They include temperature and pressure sensors, sound sensors (e.g., microphones), accelerometers, and gyroscopes. Embedding AI into these devices, through trainable ML cores and digital signal processors optimized for complex AI algorithms, makes it possible to collect, process, and transfer data faster and more efficiently.
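A small decision tree is one kind of trainable model compact enough for an in-sensor ML core, because inference reduces to a chain of threshold comparisons on precomputed features. The tree, its features, and its thresholds below are hypothetical and hand-picked; this is not any vendor's implementation or configuration format. The node count shows how a tree's size could be checked against a hardware budget, which here is itself an assumption.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Split:
    feature: str
    threshold: float
    low: "Tree"   # branch taken when features[feature] <= threshold
    high: "Tree"  # branch taken when features[feature] > threshold


Tree = Union[Split, str]  # a leaf is simply a class label


def classify(tree: Tree, features: dict) -> str:
    """Walk from the root to a leaf using one comparison per level."""
    while isinstance(tree, Split):
        tree = tree.low if features[tree.feature] <= tree.threshold else tree.high
    return tree


def count_nodes(tree: Tree) -> int:
    """Total nodes (splits plus leaves) in the tree."""
    if isinstance(tree, str):
        return 1
    return 1 + count_nodes(tree.low) + count_nodes(tree.high)


# Hypothetical tree with hand-picked thresholds; a real one is trained offline.
MOTOR_STATE = Split("rms", 0.05, "idle",
                    Split("peak_to_peak", 1.5, "running", "fault"))

print(classify(MOTOR_STATE, {"rms": 0.5, "peak_to_peak": 3.0}), count_nodes(MOTOR_STATE))
```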

For example, STMicroelectronics has recently introduced several families of low-power MEMS sensors that demonstrate this approach. The company calls its embedded digital signal processor an "intelligent sensor processing unit" (ISPU), and its ML cores feature up to 512 nodes. This is just one example of what these technologies make possible.

Evolution of the MEMS sensor ecosystems for machine learning. [Image by: STMicroelectronics/Courtesy of Avnet]

The outlook for sensors in industrial applications

Sensors will continue to become more integrated. Sensor fusion, which occurs naturally in humans, will enable intelligent systems to function more effectively, and AI/ML technologies will drive greater autonomy in industrial applications. This will lead to improvements in manufacturing efficiency, plant safety, and product quality.

Learn more about Avnet sensors and solutions at avnet.com/wps/portal/us.

Published September 2024
